Searching for the Commons of OSHW

Open Hardware has the potential to change the way we make and maintain our world - but we need to learn how to put our heads together to do it.

This post contains ideas that I’ve been bothering my friends about in conversation over the course of many years, and whose inputs (and prior works!) have been hugely valuable, so, shoutouts to Nadya, Sam, Anna WB, Quentin, Leo, Ilan, and many more - I am lucky to have found myself in this community of passionate folks!

The Question of Open Hardware’s Sustainability

Open Hardware is facing a small crisis; we have long-standing incumbents who feel ripped off and a simmering of similar sentiments in the community. Many companies that were “open by default” are reconsidering or switching to hybrid and closed-source models as they discover that cost-competing with global manufacturers is difficult to do whilst also maintaining R&D, Documentation and Support roles. Indeed, many of these companies find themselves working to support users who have purchased their designs from some third-party vendor.

At the same time, it was always the point that Open Hardware meant Open Hardware: unprotected designs that could be replicated elsewhere. Until now, OSH businesses were relying mostly on a community ethos to survive; hoping that buyers would choose to purchase from the firms who published the original designs[1]. This was based on a shared understanding that everyone benefits from open designs and interoperability, and in the FDM world that ethos worked for a long while, ushering in a “next generation” of machines that are radically more reliable, easy to use, and faster than the prior art.

Many of the hard-won insights uncovered by the Open Source FDM community have been recently deployed in the hugely successful (but closed-source) Bambu P1P, causing some to question the viability of open development practices in a field where incremental costs are non-zero. In this write-up, I want to show how we can have our cake (shared knowledge, community of practice, and Open Source) and eat it too (making profits, making complex systems, and making them easy to use and understand).

This level of refinement is the kind of engineering that normally takes place within “real” firms and behind closed doors and with centralized coordination and control of development. With the newest FDM generation, we saw that Open Hardware can do what Open Software has been doing for a while: replace centralized design of complex systems with distributed groups of individuals working asynchronously with no real coordination other than a common goal. The result of the FDM community’s work is a profound drop in cost (towards $200) and radical improvement to the quality of machines that just about anyone can buy and use in their own home to build designs of their making… it is worth a moment to appreciate what a great outcome that is.

But in some way, the FDM community has been done in by its own success: the dominant design patterns and technologies that have emerged from their hard work have become ubiquitous enough that the average FDM user doesn’t recognize where they came from, and so they simply purchase the best and the cheapest incarnation of these designs that they can get their hands on. Manufacturers are responding, and it seems that as soon as one participant breaks the game-theoretic trance and deploys open designs in closed source products, the ethos threatens to dissolve.

On the Flip

At the same time, Open Hardware is showing huge promise: during COVID-19 we saw collective action where individuals turned their FDM machines into PPE factories. Digital Fabrication is continuing to make its way into STEM education, and a new generation of young engineers is growing up in a land filled with Arduinos, Breadboards, Fritzing and FreeCAD. Every year it seems like one or two new Open Hardware Machine Companies emerge; this year we got an excellent pick and place machine, and 2024 may be the year we finally get ‘the prusa of milling’.

Organizations like OSHWA and Hackaday / Crowdsupply and Hackster are expanding, and scientists and academics are increasingly turning to OSH in order to increase accessibility of experimental hardware. GOSH has emerged around Open Hardware in Science and organizations like BOING are building open instrumentation, an effort that the CBA (where I work) is also contributing to… we imagine a future where scientists who publish data also ubiquitously publish designs, algorithms, and controllers for their experimental hardware - and where that hardware is easily sourced, assembled, and commissioned so that others can re-create and expand the frontier.

What’s the Vision, and What’s the Point?

What’s at stake is, in my opinion, the most important quality of the whole human project: our ability to put our heads together and make meaningful stuff - to build off of one another’s intellectual outputs as we pursue our personal and common goals.

For a long time, the dominant narrative around the design and production of complex things (engineering artefacts, let’s say) was that it took place within firms, was mostly proprietary, and that the outcomes were protected by patents. This has never been completely true[2], but Open Source Software blew it entirely out of the water as it came of age in the late 90’s / early aughts. Open Software completely changed the way we write code, and to have similar impacts in the realm of real stuff would be meaningful in ways I probably do not have to explain at length: with hardware we’re talking about healthcare, housing, agriculture, transportation, energy, science… not just apps.

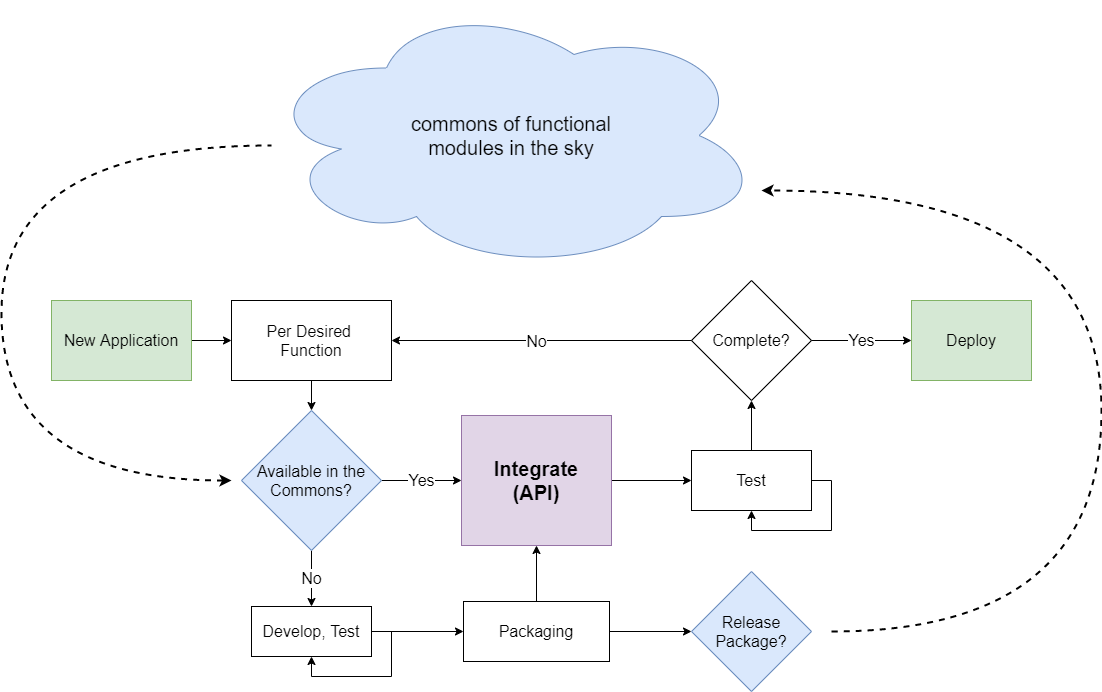

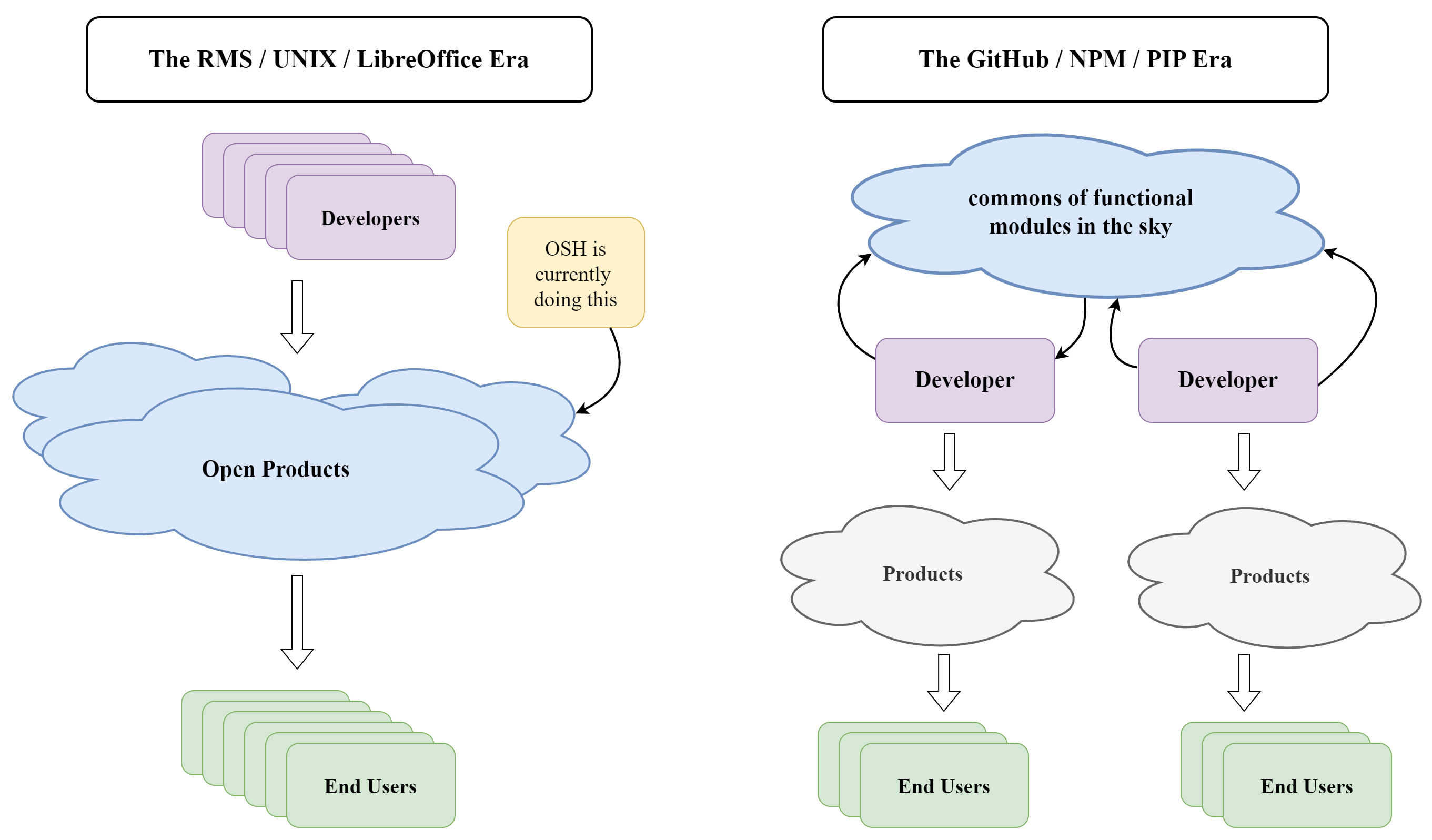

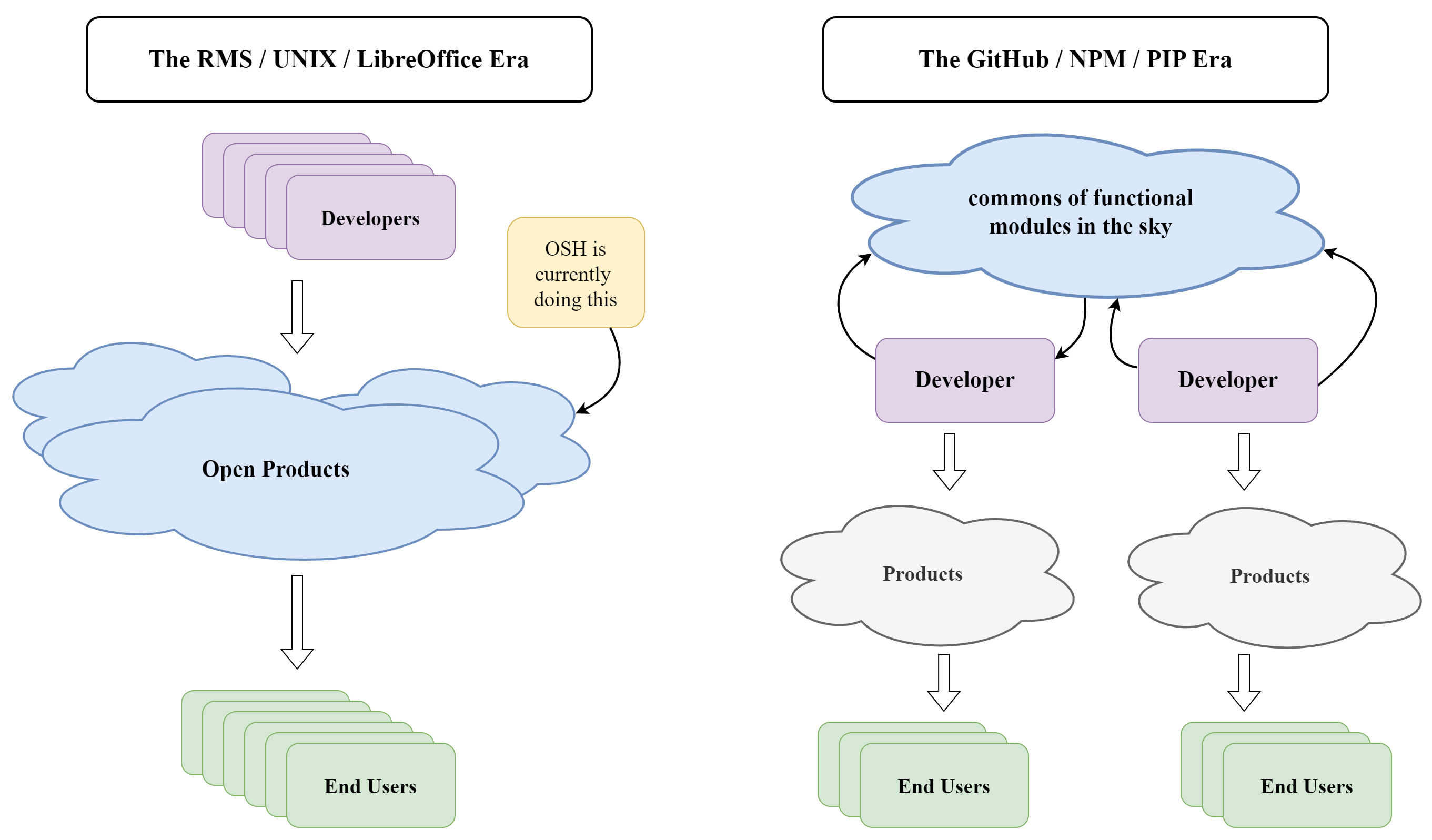

What made Open Software so powerful was a reorganization that started as recently as 2008; it transitioned from a focus on monolithic, end-user facing products (like LibreOffice and Linux) to a commons of functional modules that are rapidly re-assembled into application specific tools. I think that Open Hardware is on the brink of a similar transition, and that it can lead us to a world where we can have our cake (interoperability, shared community of practice, open designs) and eat it too (making profits, making complex systems, making them easily understood and replicable, and making sustainable hardware companies).

What’s in This Post

In this post, I want to start exploring this idea of modularity in Open Source, looking in particular at well-developed theory from the sphere of Open Source Software. The goal is to learn what we might apply from that domain into ours, in Hardware. This breaks down into a few sections:

(1) Some motivating thoughts, exemplary failures, and historical lessons from interoperable computer hardware.

(2) Insights from economists and practitioners of the (hugely successful) Open Source Software ecosystems: how do they work and how do they emerge?

(3) Understanding key technical properties of the commons.

This review should elucidate the core theory around Commons Based Peer Production, and ground it in a discussion of hardware modules. Over the next year, I want to follow this up with two or three more posts; one that provides an overview of the “state of the commons” in open hardware, a second that proposes device-to-device interoperability as a way forwards towards an even more productive commons, and perhaps a third that goes into more detail on the foundational economics and imagines business models that could thrive in the commons.

1. From Monoliths to Modules

“Hardware is Hard” is an adage that gets thrown around a lot in the Open Hardware community, but I don’t think it needs to be this way. The utterance normally comes from folks who are accustomed to writing software and then find themselves in the wee hours of the morning trying to reconcile their circuit schematic with a sensor’s datasheet and someone else’s pinout diagram. In software development, we don’t have to sweat these details: libraries and lower layers are successfully encapsulated into (mostly) well-designed APIs, and we simply invoke them with function calls in our favourite language. The result is that we get to use tens or hundreds of other developers’ expertise in our own projects at a low economic and intellectual cost: we don’t pay for them, nor do we have to invest hours of learning in order to understand how to use them.

With a little bit of effort, I think that Open Hardware can become this easy. We are reaching a critical mass of expertise and energy, the cost of embedded computing continues to drop, and more and more valuable systems components and design patterns are emerging. There is a chance to transition from monolithic to modular contributions, enabling open hardware to cross a threshold into the production of complex, systems-scale artefacts. This would have ramifications wherever we design and build hardware; from toy-building to energy infrastructure and medical equipment. Success could mean that:

- Individuals and firms would be able to build new mechatronic equipment as easily as they write new software today (in weeks, not years).

- More individuals would be engaged in the active making and maintenance of our world: more meaningful jobs and more ‘hands on deck’ to face meaningful challenges.

- Society as a whole would be better equipped to face radical changes: economic upheavals, pandemics, existential (warming) threats - by producing real, useful artefacts and infrastructures at a lower engineering cost.

When we take a cold-hearted look at the state of open hardware today, these three outcomes can seem fairly pie-in-the-sky. On the other hand, when we think of how many engineering-hours are saved with Open Source in software systems, and how many large firms have adopted OSS as a core practice, it is hard to deny that open access to a commons of hardware designs and design patterns would represent a vastly more efficient economy than the one we see in action today, where re-engineering is the norm.

I would also call upon the doubtful reader to consider that as recently as 2004, Butler Lampson reported that “a general library of software components […] is unlikely to work”[3] - just a few years later, Node.js emerged, and its package registry (NPM) currently plays host to over 1.3 million such modular components used by millions more developers. And if Open Hardware seems ‘too dinky’ to face ‘real engineering challenges’ in the future, I would also point out that the world’s best cryptographic algorithms are Open Source (more eyes see more potential pitfalls), and that the whole Personal Computing revolution was founded by small groups of hobbyists working “for fun” on systems that basically just blinked.

1.1 Scoping the Discussion

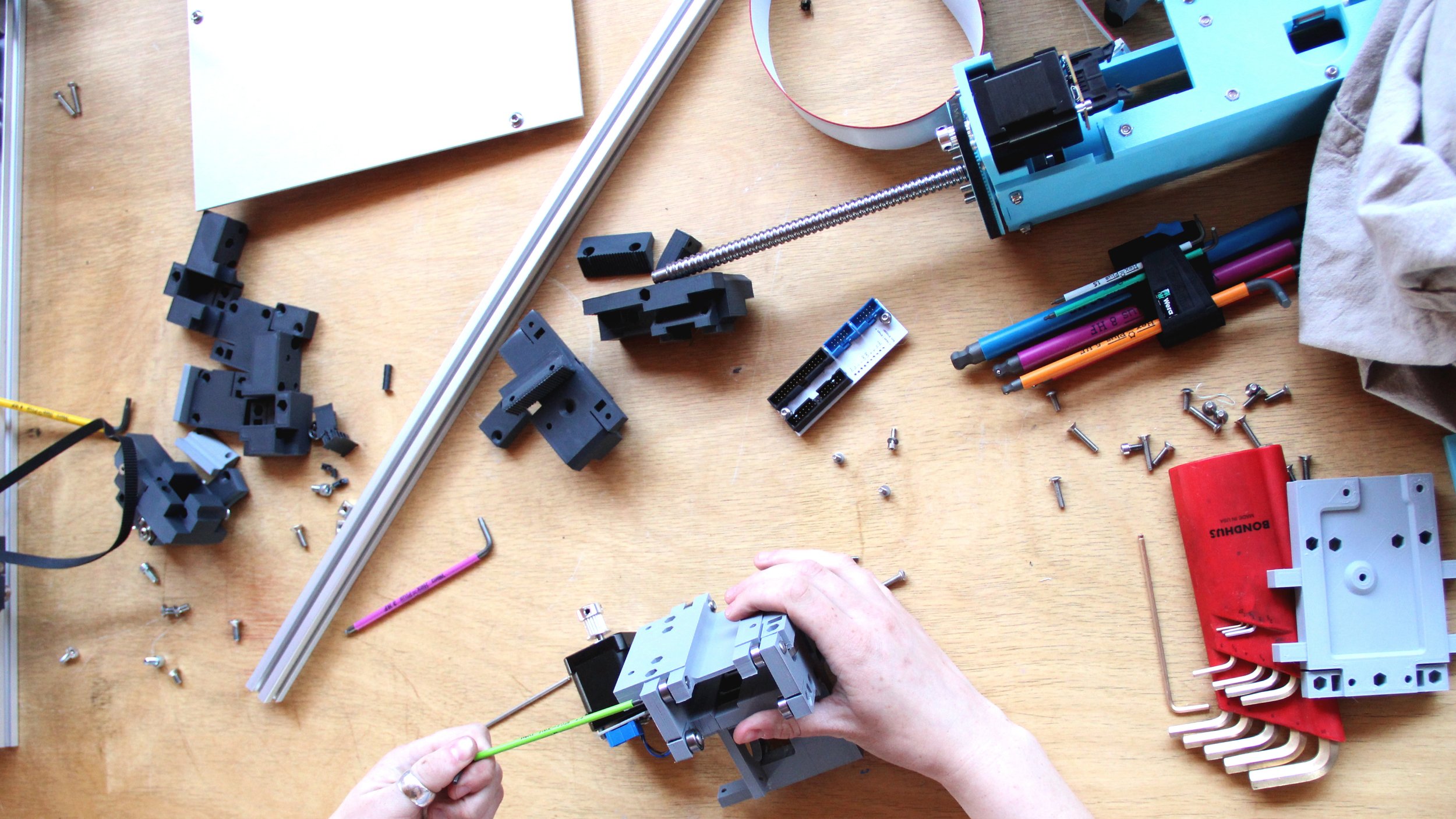

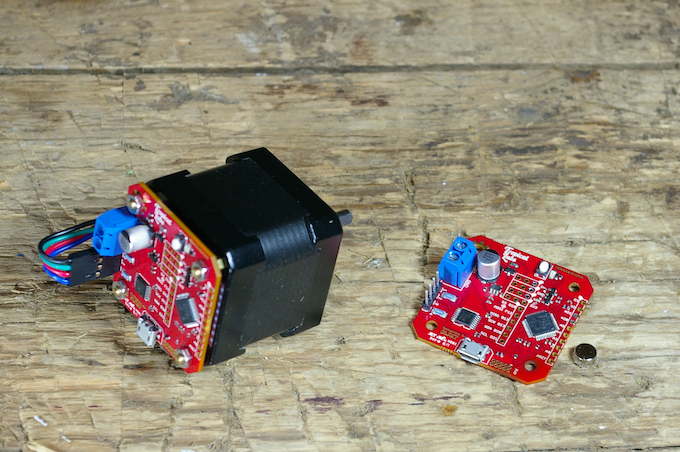

All of this is background to work that I do mostly in the area of machine building: on modular motion controllers, feedback programming models, and networked systems assembly tools. When we talk about hardware it’s very easy to go off the rails, since the whole world is made of it. In this discussion, I want to stay focused on functional blocks of hardware, and since the world is filled with (and mostly operated by) computing these days, I’m mostly talking about mechatronic modules. These are components that we can imagine re-using in multiple contexts like motor controllers, encoders, computer vision solutions, end-effectors, etc; the things that are often the most valuable parts of a project, but are the most difficult to copy from someone else’s contribution. The Mechaduino is a great example:

1.2 Mechaduino: in Search of a Systems Integration Tool

when do we get to the point where, if I plug something in, it just works?

- Joe Jacobson (Lab Lunch, 2021)

Getting to this level of modular interoperability probably needs a new kind of systems integration (SI) tool, something like an NPM for Mechatronics. To explain what I mean by this, I want to remind readers of the Mechaduino. This is a closed-loop driver that represents a clear improvement in positioning accuracy, speed and reliability for one of the most common components in open machine design (the NEMA 17 Stepper Motor).

The Mechaduino was an early OSH module that represents a significant improvement (open to closed loop) on motor control. However, since its launch in 2016, no open motion controllers can integrate with it appropriately.

This is a great example of a hardware module: it packages the developers’ expertise in a niche area (closed-loop control of a stepper motor) into a functional block for an application that many other developers are interested in (motion systems). But the Mechaduino was missing a larger architecture to live in; users were left to customize and re-compile the device’s firmware in order to integrate it into their application, and it didn’t expose a clear interface with which it could be “plugged in” to other systems. Although it was released in 2016, the Mechaduino has not yet made its way into any other substantial open hardware project, nor has its core design pattern (although many clones exist, and even I have designed a few!).

This is perhaps because closed-loop drivers are not natively supported by open motion controllers, or because (more broadly) we don’t have a good framework for projects that deploy on multiple microcontrollers (i.e. a motion planner talking to multiple modular motor controllers simultaneously). It may also be that the Mechaduino is simply more expensive than its alternatives (at $65 vs ~ $20), despite my own anecdotal evidence of seeing many machine builders over the years spend above $250 per motor for proprietary closed-loop solutions. It seems that the Mechaduino was a great module without a modular architecture to live in.
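To make the missing piece a little more concrete, here is a minimal sketch of what a shared module interface could look like. Everything in it is hypothetical: `MotorModule`, `Planner`, `plug_in`, and the two driver classes are illustrative names, not part of the Mechaduino firmware or of any existing motion controller. The point is only that the planner talks to an interface, so an open-loop and a closed-loop driver can be swapped without re-compiling anything.

```python
from dataclasses import dataclass, field
from typing import Protocol


class MotorModule(Protocol):
    """Hypothetical interface that any plug-in motor driver would expose."""

    name: str

    def move_to(self, position_mm: float) -> None: ...

    def position(self) -> float: ...


@dataclass
class OpenLoopStepper:
    """Stand-in for a plain stepper driver: it assumes every step lands."""

    name: str
    commanded: float = 0.0

    def move_to(self, position_mm: float) -> None:
        self.commanded = position_mm

    def position(self) -> float:
        # No feedback available, so the best guess is the last command.
        return self.commanded


@dataclass
class ClosedLoopStepper:
    """Stand-in for a closed-loop driver that reports an encoder reading."""

    name: str
    encoder: float = 0.0

    def move_to(self, position_mm: float) -> None:
        # A real driver would run a control loop; here we pretend it converged.
        self.encoder = position_mm

    def position(self) -> float:
        return self.encoder


@dataclass
class Planner:
    """Toy motion planner that only knows about the shared interface."""

    axes: dict[str, MotorModule] = field(default_factory=dict)

    def plug_in(self, axis: str, motor: MotorModule) -> None:
        self.axes[axis] = motor

    def go(self, **targets: float) -> dict[str, float]:
        # Command each named axis, then read back every axis position.
        for axis, target in targets.items():
            self.axes[axis].move_to(target)
        return {axis: motor.position() for axis, motor in self.axes.items()}


planner = Planner()
planner.plug_in("x", OpenLoopStepper("basic-driver"))
planner.plug_in("y", ClosedLoopStepper("closed-loop-driver"))
print(planner.go(x=10.0, y=25.5))
```

In real hardware the interface would sit on a network or bus between microcontrollers rather than inside one Python process, which is exactly the part that is still missing.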

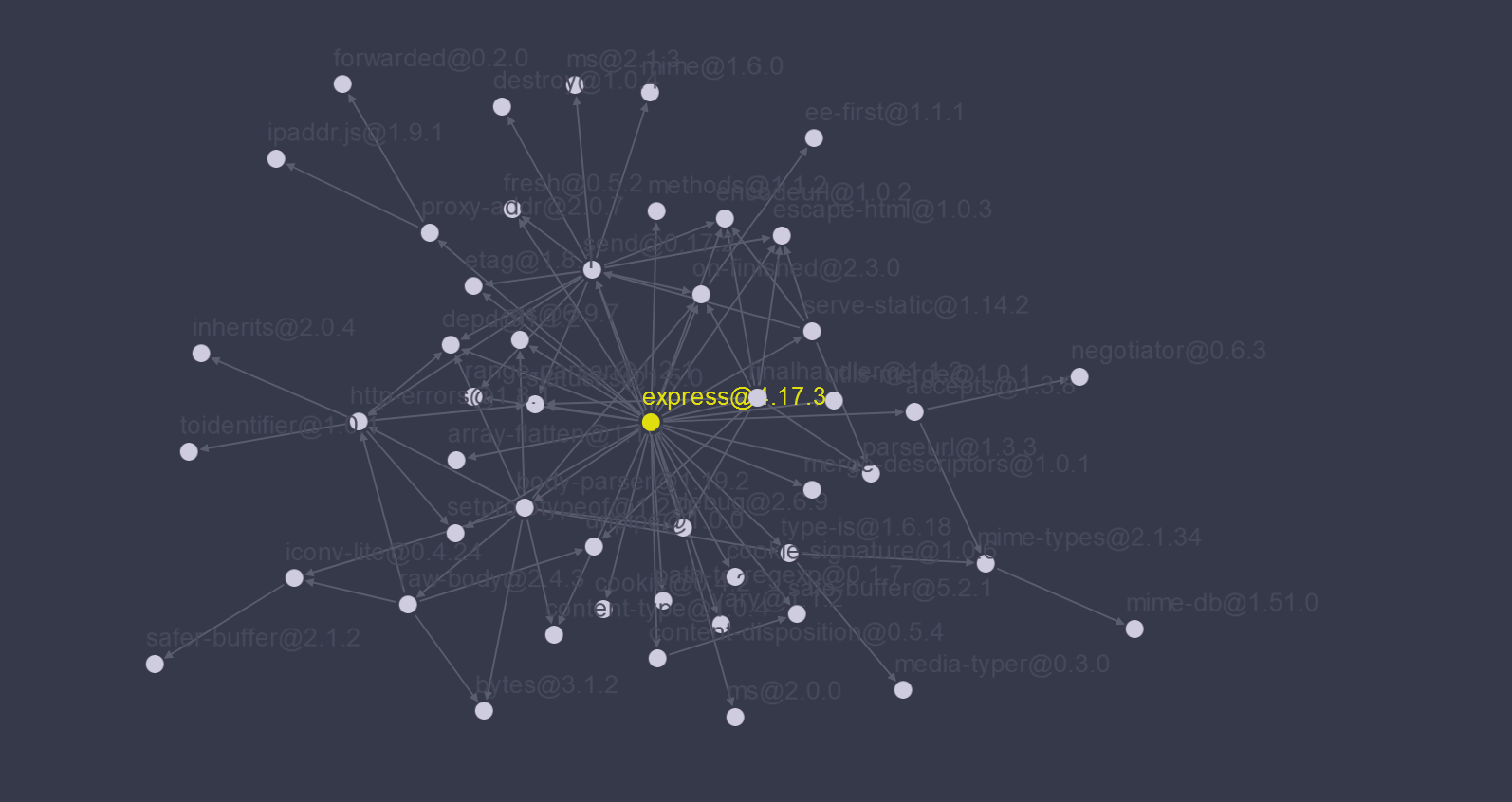

Rendered from the NPM graph viewer, the NPM package express - a server middleware code - easily bundles tens of others’ modular contributions into a new contribution.

If we look instead at Open Software, interoperability is the name of the game. Contributions are made of other contributions, and are all integrated seamlessly within some shared language: JavaScript or Python, for example. I think this is worth a second thought: programming languages are systems integration tools. Any function written in Python can be called natively in anyone else’s Python script. This is obvious, but it is also Open Software’s super power: between high-level languages (with standardized self-documentation practices to boot), and the operating system (which abstracts hardware heterogeneity away from systems assemblers), anything written anywhere can be deployed anywhere else. Open Hardware will have to work harder for interoperability.
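As a small illustration of the language acting as the integration tool, the sketch below assembles a new function entirely out of other people's functions from Python's standard library (`statistics` and `json`). The function name `summarize` and the sample readings are made up for the example.

```python
import json
import statistics


def summarize(readings: list[float]) -> str:
    """A new 'contribution' assembled from other developers' functions."""
    report = {
        "count": len(readings),
        "mean": statistics.mean(readings),
        "stdev": statistics.stdev(readings),
    }
    # json.dumps turns the report into text that any other tool can consume.
    return json.dumps(report)


print(summarize([1.0, 2.0, 3.0]))
```

No wiring, pinouts, or firmware changes were needed to combine the two libraries; the shared language and its calling convention did that work.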

1.3 For Reference: IBM’s Open Hardware Mistake Was a Silicon Valley Catalyst

Interoperability is also the basis of computer hardware at large - servers, PCs, etc are all made from components that work together based on well defined standards. It’s an economic pattern that we take largely for granted, but which has a worthwhile origin story.

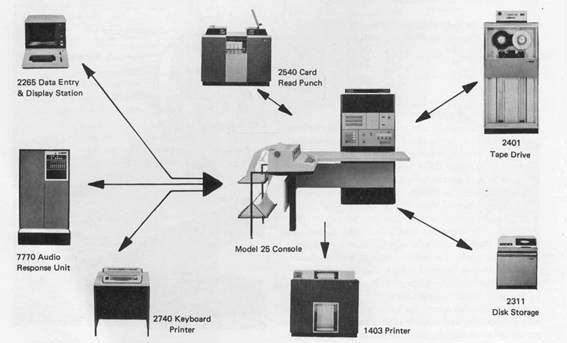

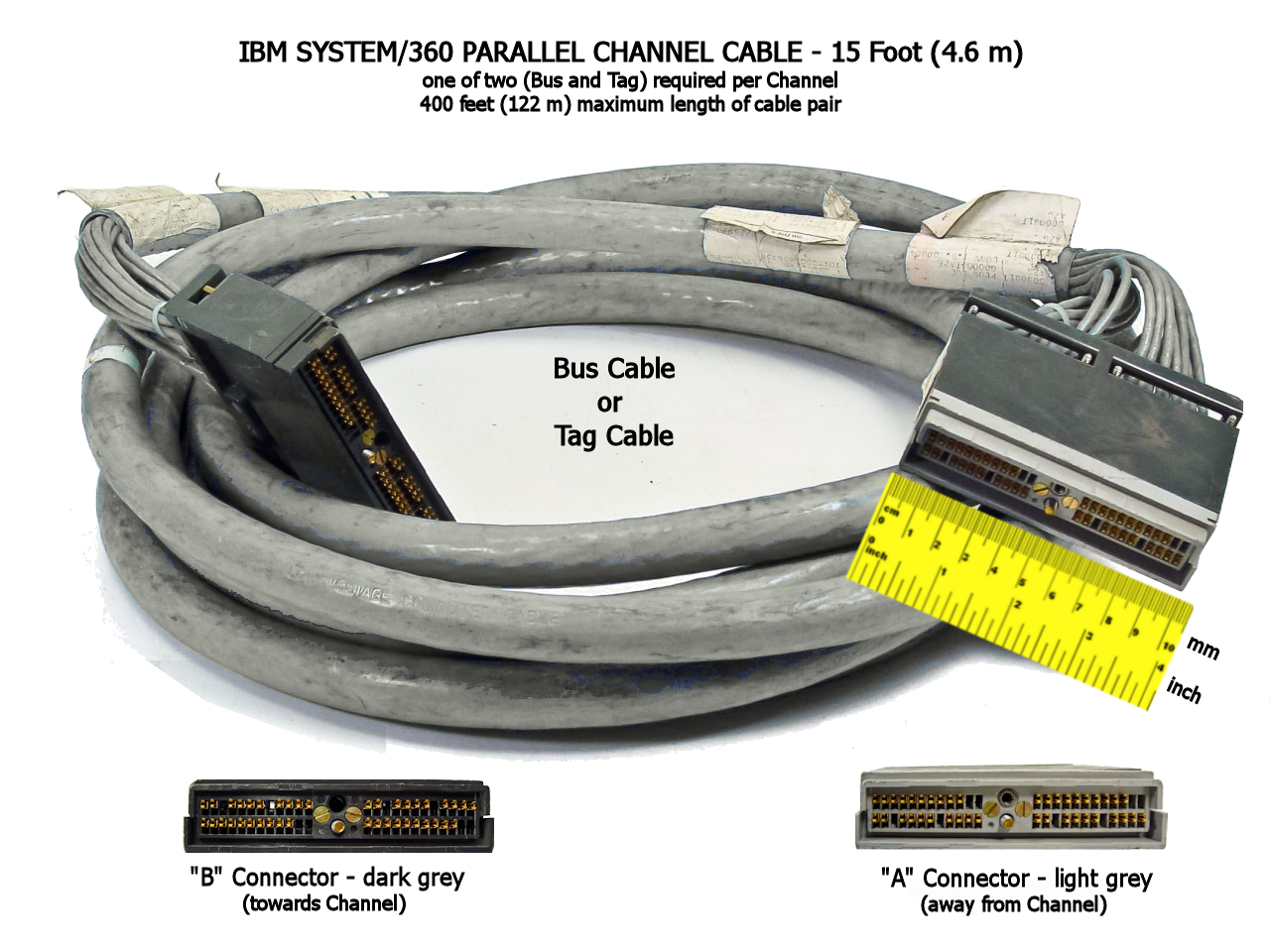

In the 1960’s, IBM’s flagship mainframe was the System/360 - and it represented the first computing system to intentionally generalize across markets: the 360 was used in business and scientific applications alike. It was also one of the most complex systems IBM had ever developed. A modular systems architecture helped them take on both challenges.

Images from IBM. System/360 was an early mainframe computer that paved the way for modularity throughout the IT sector.

Internally, modularity was a wonderful engineering-labour-coordination trick: it meant that when someone in the user-input team made a change, it wasn’t coupled to any design choices being made in the memory-control team[4] (for example). Today, this kind of separation of interests is commonplace in systems design, but the 360 was emerging in a world where prior art was mostly monolithic, and where the first nuggets of information hiding theory and abstracted programming languages were still just emerging[5].

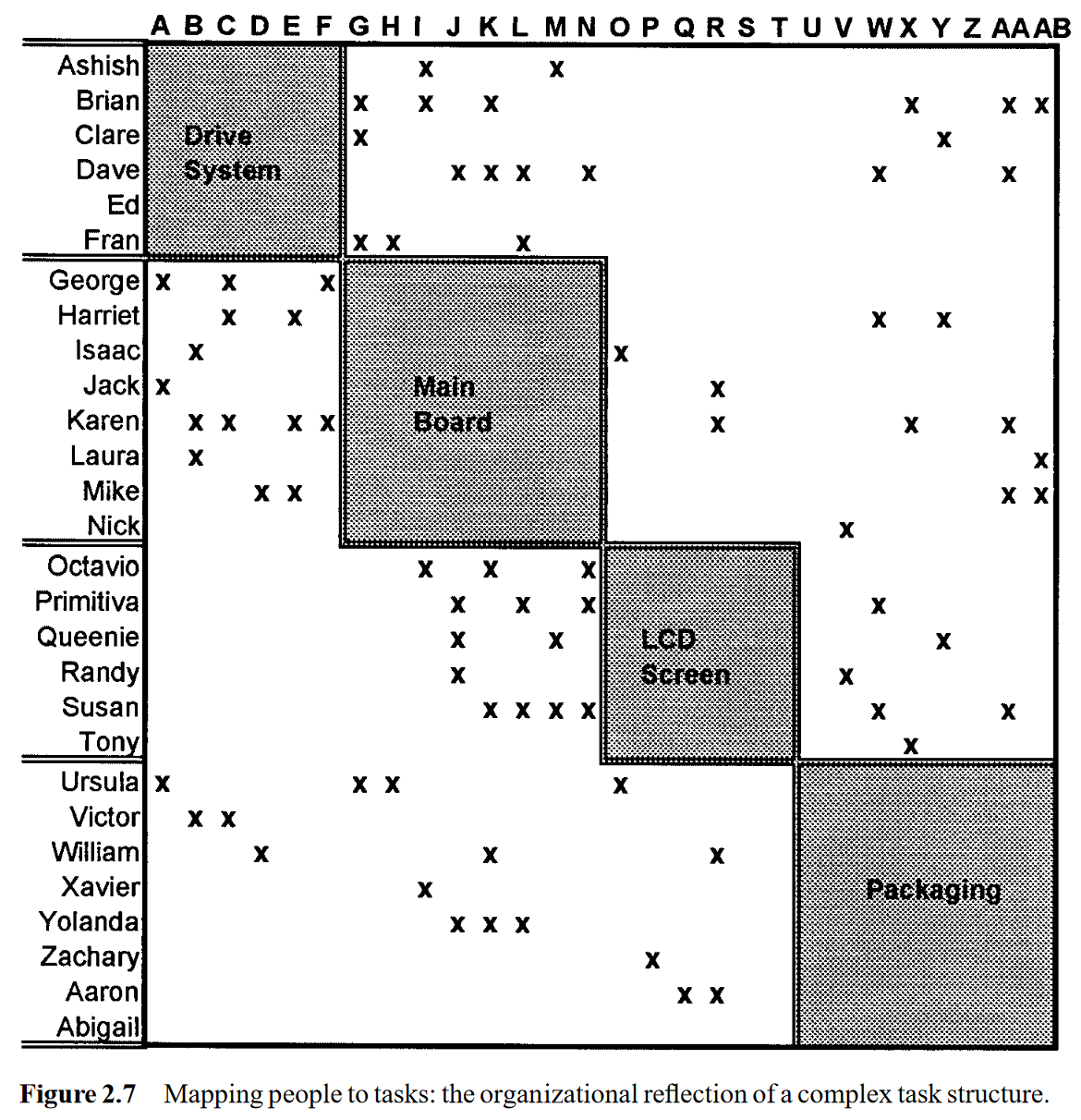

Image (and much of this sidebar) from Design Rules Vol. 1. Figure is of a Design Structure Matrix that relates tasks (and designers) to one another. Tasks are enumerated on both axes of the matrix, and where two tasks constrain one another we mark an x. A design task is modularized when we can diagonalize that matrix to some high degree.
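The Design Structure Matrix idea can be sketched in a few lines. In the example below the task names and couplings are invented for illustration (they are not taken from Design Rules), and the grouping method is a simple connected-components search, which only finds blocks that are fully decoupled from one another; real matrices usually keep some coupling outside the blocks.

```python
# Tasks appear on both axes; a 1 marks that two tasks constrain each other.
# Task names and couplings are hypothetical.
tasks = ["cpu", "keyboard", "memory_ctrl", "display", "bus"]
dsm = [
    [0, 0, 1, 0, 0],  # cpu <-> memory_ctrl
    [0, 0, 0, 1, 0],  # keyboard <-> display
    [1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0],  # bus constrains nothing in this toy example
]


def find_modules(tasks, dsm):
    """Group tasks into modules: sets of tasks with no coupling across sets."""
    seen = set()
    groups = []
    for start in range(len(tasks)):
        if start in seen:
            continue
        seen.add(start)
        stack = [start]
        group = []
        while stack:
            i = stack.pop()
            group.append(tasks[i])
            for j in range(len(tasks)):
                # Treat a mark in either direction as a coupling.
                if (dsm[i][j] or dsm[j][i]) and j not in seen:
                    seen.add(j)
                    stack.append(j)
        groups.append(sorted(group))
    return groups


print(find_modules(tasks, dsm))
# [['cpu', 'memory_ctrl'], ['display', 'keyboard'], ['bus']]
```

Re-ordering the rows and columns so that each group's tasks sit next to each other gives the block-diagonal picture the caption describes: each block is a team that can work without waiting on the others.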

Externally, modularity allowed IBM to sell their mainframe to a wide selection of customers. Consumers of computing at the time were highly sophisticated users (banks, universities), who hired groups of staff to run their machines, not unlike the current state of the art with industrial Digital Fabrication. These users wanted to be able to modify their equipment, and understand it. So, IBM also released the 360’s instruction set, bus architecture spec, and the busses’ plug design.

Image via Wikipedia contributor Tom94022. System/360 was an early “plug and play” architecture.

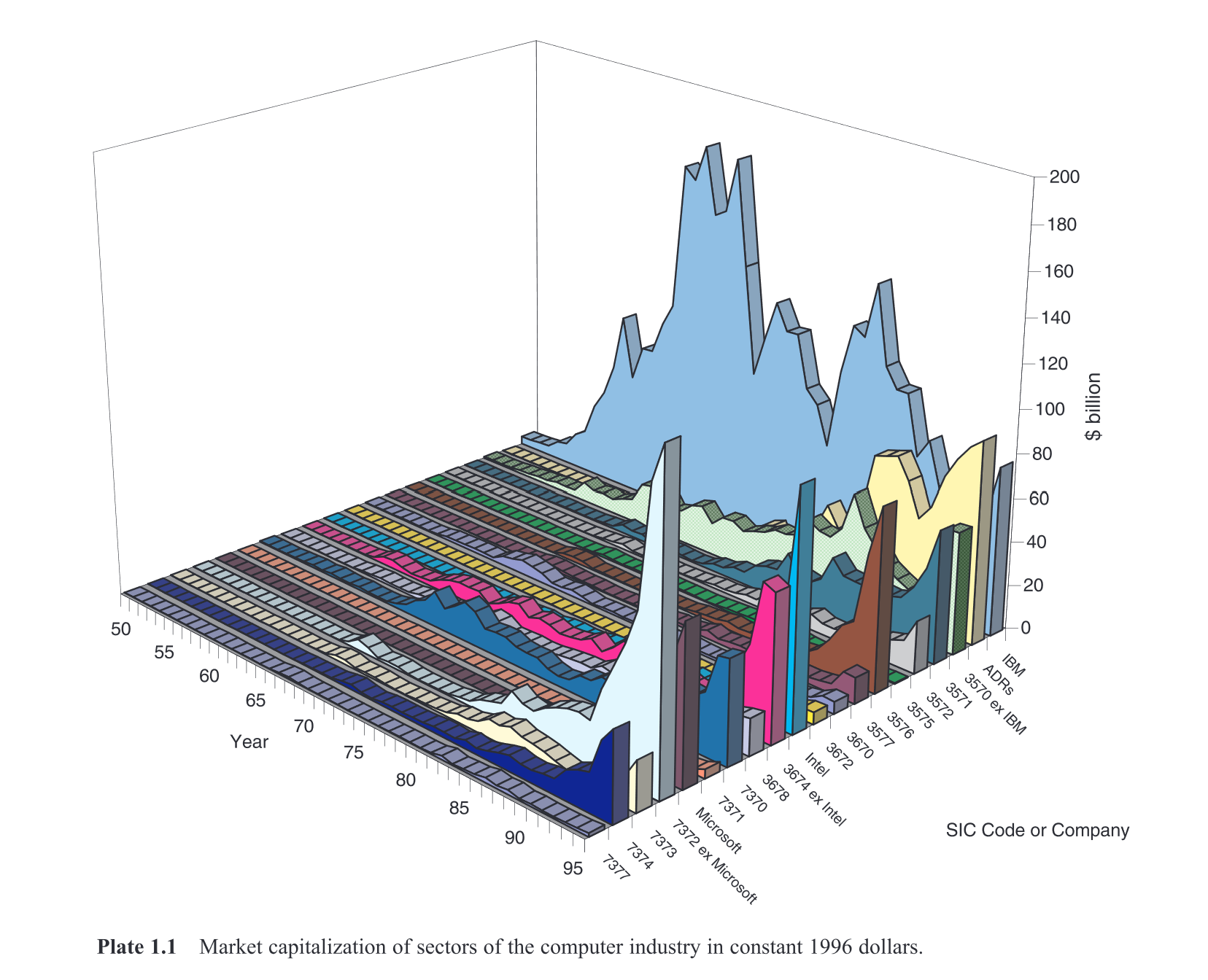

This has some real inklings of the so-called platform economy[6] that emerged decades later, but IBM forgot to do the lock-in part, bless their hearts. As a result, their market share tanked - as tens of other high-tech competitors emerged.

Image from Design Rules Vol. 1. When IBM released the System/360, their open architecture led to the emergence of many competitors, who ate up much of their market share in later decades. IBM’s share is in the back of this image, in blue.

This was bad news for IBM, but it was a major contributor to the historical bifurcation that made the Silicon Valley ecosystem into a world center for innovation in the following decades. Baldwin and Clark describe the resulting situation well:

“Our theory is that modular designs evolve via a decentralized search by many designers for valuable options that are embedded in six modular operators.”[7]

Basically, we got into a situation where all of these (now big-and-fancy, then small-and-scrappy) firms were effectively working together to improve one modular system: the modern computer. Parts were interchangeable, individual contributors could focus on highly specialized parts of the whole, and the systems still clicked together and worked - even though very few of the specialized engineers even knew who the others were, or how their counterparts’ contributions worked.

The System/360 modularity lives on in today’s (mostly consortia-standardized) interoperability layers. Engineering Rules covers their development, maintenance, and dissemination.

Over time, the underlying architectures mutated as well, and new ones popped up. The particular design of the System/360’s architecture didn’t survive beyond the mainframe’s existence, but the design pattern survived and improved, and it continues to do so[8]. It is still possible to build your own computer using graphics cards from AMD or Nvidia, CPUs from AMD or Intel, memory from tens of different vendors, etc etc etc - all of this happens because this modular architecture is still with us, today in the form of tens of different specifications: DDR, PCIe, USB, … These standards are developed and maintained by numerous consortia and working groups, which (if you’re into that kind of stuff) is explored in depth in Yates and Murphy’s Engineering Rules[9].

Economists all basically agree that this situation is universally beneficial: there are minimal re-engineering efforts, there is less monopolization, and we end up building better-performing systems overall. However, it seems difficult to get “into the groove” of it, so to speak: it requires large activation energy or, in the case of the System/360, it requires that a major incumbent makes a strategic misstep.

There’s of course more to the info-tech story than I am presenting here, especially in the decades of work spent building the technologies that bring modularity to computers (compilers, languages, ISAs, etc) - as well as this story on ‘unbundled software’. In all, I think that the resulting economic setup is fascinating - even some kind of a miracle - and it provides a good backdrop for the rest of this discussion.

2. Commons Based Peer Production

On top of all that interoperable hardware, we eventually built Open Source Software (OSS), and since the late 90’s, economists and social scientists have been trying to figure out how it works. Not how the code works, but how the people coordinate themselves to work on the code.

The first mystery was that highly talented individuals would give up their valuable time and energy to build free things, and the second (larger) mystery was how they were able to build complex things without central coordination. Software like Unix, Apache and LibreOffice was normally built using hierarchical coordination within a firm, but then suddenly there were these FOSS nerds doing it on message boards without any real bosses. Sounds cool, but it didn’t make any sense in the old models.

The mystery has since been mostly solved, though the landscape continues to evolve. Economists[10][11] came to a few conclusions:

(1) People are naturally motivated to build stuff that they’re interested in.

(2) People are excited to share those things that they’ve built, and see their contributions realized in the world.

(3) Radically open “labour markets” like those existing in OSS projects (where anyone can work on anything) do a better job of matching interest and talent to problems that need solving (or that require invention, etc).

So, Open Source turns out to be a really, really good way to build things that require specialized skillsets - like Operating Systems or, perhaps next up, robotic systems. It also requires good plumbing so that individuals’ niche contributions can easily be assembled into complete systems.

The most revelatory book I read on the topic (Working in Public by Nadia Eghbal)[12] compares OSS to social media, showing that folks are also motivated by clout, and that most software these days is “open by default” (whereas earlier ethos were more evangelical[13][14][15]). It also cites Commons Based Peer Production (CBPP, a theory developed by Yochai Benkler[10]) as the working method behind OSS. CBPP is “a form of collective intelligence”[16] that most software developers will these days be well acquainted with. In CBPP, there exists a large collection (a commons) of generalized, functional building blocks (libraries and packages) that can be easily integrated into new, specific applications (using APIs and high-level languages).

Commons Based Peer Production is an economic mode of production linked to a practice of systems development (pictured here), where specific applications are made from a Commons of generalized functional modules. CBPP is prevalent (and powerful) in Open Software, but relies on technical infrastructures that are somewhat difficult to replicate in Open Hardware.

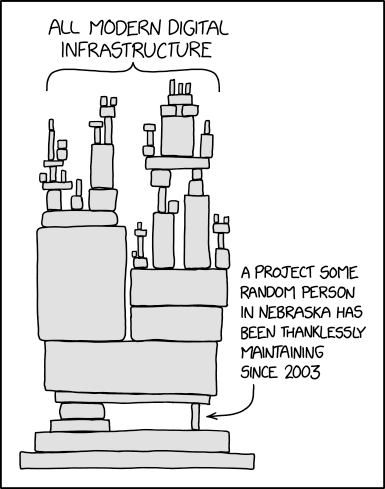

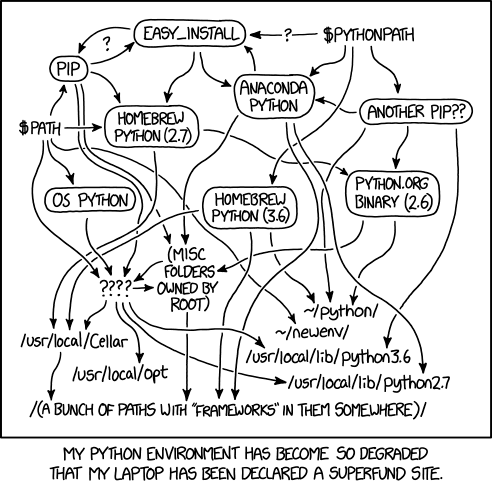

These commons are wonderful repositories of human intelligence, but not without their flaws. In particular, it is difficult to regulate the quality of their contributions, especially when contributions are made in multiple tiers (dependencies of dependencies, etc…). There are horror stories of singular, simple packages breaking entire swathes of the internet. That said, packages are chosen by intelligent developers, user-base consensus tends to emerge as to which are most useful, and safety and stability are part of that selection process.

XKCD: Dependency and XKCD: Python Environment. The systems that make up CBPP have many flaws, but its overall usefulness and value is clear to anyone who writes software.
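The “dependencies of dependencies” problem is easy to see in a toy dependency graph. The package names below are hypothetical and the graph is invented for illustration; the function walks the graph backwards to find everything that would be affected if one small package broke.

```python
from collections import deque

# Hypothetical packages: each key depends directly on the packages in its list.
depends_on = {
    "web-app": ["server", "forms"],
    "server": ["router", "tiny-util"],
    "forms": ["validator"],
    "validator": ["tiny-util"],
    "router": [],
    "tiny-util": [],
}


def affected_by(broken: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every package that depends, directly or not, on `broken`."""
    # Invert the graph: for each package, who depends on it?
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for name, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(name)

    # Breadth-first walk up the inverted graph.
    affected: set[str] = set()
    queue = deque([broken])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


print(sorted(affected_by("tiny-util", depends_on)))
# ['forms', 'server', 'validator', 'web-app']
```

Only `router` escapes in this example: a package that nothing depends on directly can still sit underneath most of the tree.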

CBPP also stands in contrast to the earlier Open Source ethos in its positioning; proponents of the 1990’s era movement supposed that legions of Open Source developers would build prevalent end-user facing products (word processors, operating systems etc) that would liberate end-users from for-profit software companies. “Why pay for code when incremental costs are zero?” was a sensible and courageous stance that didn’t pan out: Microsoft Word still Reigns Supreme.

While LibreOffice developers and their ilk were on a valiant mission to deliver free software to the people (but sort of failed), the current paradigm of CBPP has just about everyone on earth consuming Open Source contributions without their even knowing it - and it seems to have done it accidentally: as a by-product of developers just building better components for one another to use.

Early models of Open Source (left) supposed that large groups of developers would work together on large, end-user facing products like word processors. The dominant, emergent model (right, Commons Based Peer Production) is one where small groups of developers work on small, functional building blocks (libraries and packages) that are accumulated in commons (like PIP and NPM) and subsequently re-assembled by other developers into end-user facing products. This has the effect of freeing Open Source developers, who are normally motivated by personal interest, from building “the boring components” of any given system (like UIs, user manuals, etc) and allows them to make contributions on “fun” parts like advanced algorithms, architectures, data structures, etc.

Open Source turns out to be better at producing infrastructural codes (server operating systems, network protocols, security algorithms) and generalized software modules (databases, mathematical routines, graphics tools, form validation…). This is partially because those are the fun problems - and OS developers prefer the fun problems - and also because none of them require OS developers to author much in the way of user-facing documentation. Or, rather, their users are other developers, and a community of practice and common knowledge means that documentation can be minimal.

This is another moment worthy of a second thought. At the moment, most Open Hardware projects need to be documented up the wazoo. When I deployed clank! for HTMAA students in 2020, I had to explain to everyone what a socket head cap screw was, what a NEMA 17 motor is, etc etc; we have students who have never held a power drill. Documenting machine kits to a level that everyone could be successful was a huge undertaking, one that I haven’t had the time to do since then, even though I have improved designs. Were it the case that we had a recognizable community of machine builders (and it is emerging), who shared contributions in a collective practice, the documentation burden would be much smaller. This is all just to say that speaking to a topic expert about their topic requires far fewer words: and that is effectively what happens in Open Software and what could be happening in Open Hardware. Two programmers need only explain their APIs to one another, not how to instantiate an object, how to call a function, etc etc…

… OK, we were talking about how Open Source is consumed like a public good. Well, those infrastructural codes and modules we were talking about, those are widely used by other developers who package them up into application-specific user-facing products that they often make a profit on. This might seem like a devilish take for the Open Source evangelicals among us, but they should consider just how little most software costs, and just how many niches it is able to fill. Good software would be more expensive and less widely applicable if it were not written on the back of the commons. As Nadia Eghbal relates, “Open Source is produced like a commons but consumed like a public good.”[12]

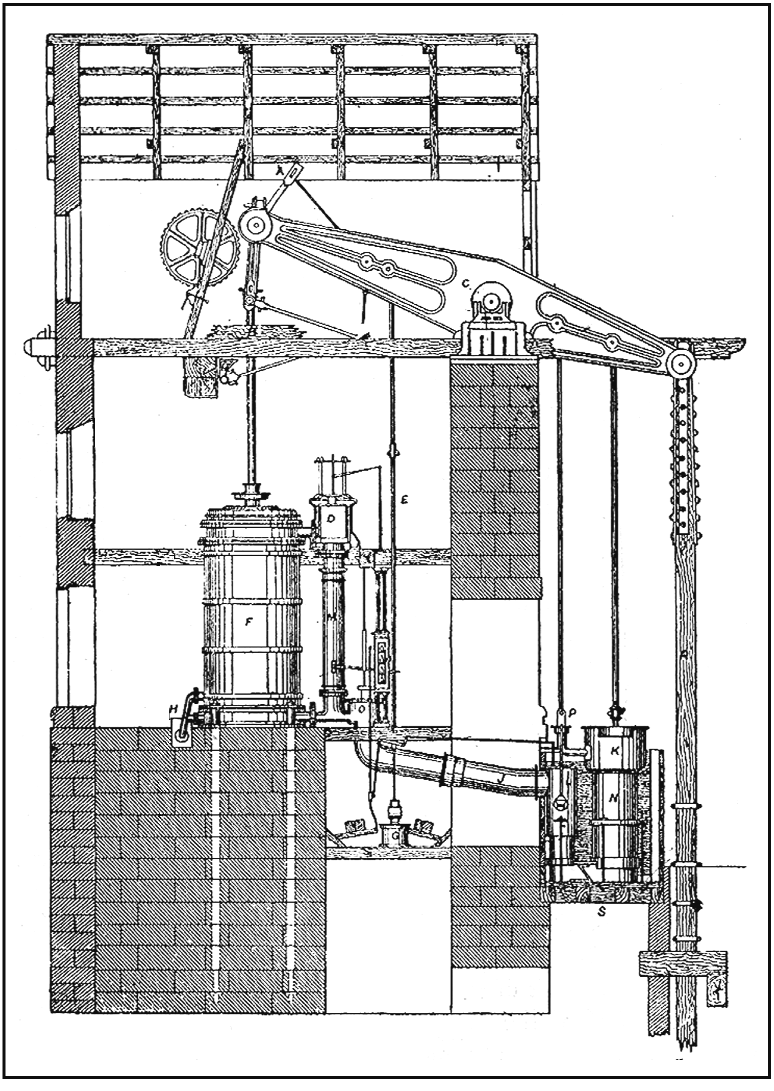

Along this tangent is a field of research on information commons writ large (like Wikipedia) of which OSS is one of many, and of which OSH would be one as well (itself not really being made up of hardware, but of hardware designs). For background in that domain we have e.g. Elinor Ostrom[17][18] and Lawrence Lessig[19]. There is also fascinating work on how open-ness (as in, information sharing) helped to advance the design of steam engines in the very early industrial revolution[2][20], as well as Eric von Hippel’s famous exploration of Open Source patterns in extreme sports and other hardware[21], and Baldwin and Clark’s really-super-neat work on modularity in the hardware of information systems[7] (i.e. computers) whose development they describe as another form of collective intelligence[16]. Finally, we shouldn’t forget that much of the hardware world is already made from a commons of standardized components; this is well documented in Yates and Murphy’s Engineering Rules[9].

2.1 Design Patterns Precede Direct Integration of Designs

So, Commons Based Peer Production is a wonderful framework to understand how our technical world can be built out of a kind of collective intelligence, but it mostly explains how direct commons operate once they have been established. Since this little essay is supposing that OSH would be helped along by establishing more of these types of commons, we also want to understand how they emerge.

To differentiate, we call a direct commons any identifiable collection of designs - these are concrete, dimensioned, rigorously specified drawings, source codes, schematics, circuit layouts, documents, etc: these are all contributions that can be immediately put to use. Before these emerge, we can identify commons of practice. These are just as real, but they are made up of design patterns; ideas about how to generally go about making a thing. This might include sets of kinematic arrangements like corexy, belt tensioning strategies, embedded programming patterns like static memory allocation, etc. Design patterns could probably be conveyed successfully in conversation, or on a whiteboard, but designs require more tooling: dimensioned drawings, file formats, etc. Between direct commons and common practices, the latter are probably more important overall, but they’re squishier: harder to delineate and measure. They also tend to emerge as pre-requisites.

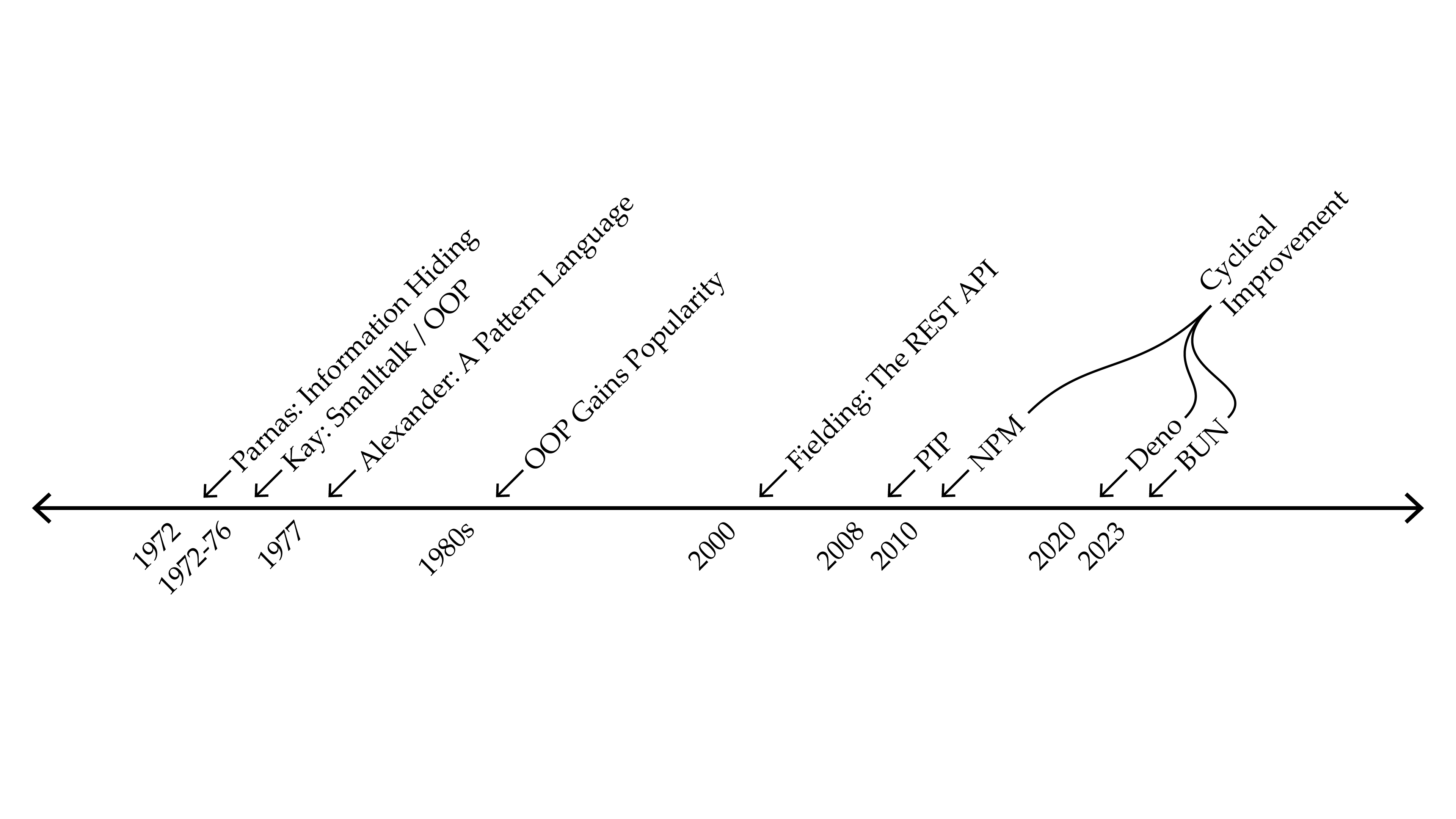

I like to take Object Oriented Programming (OOP) as an example. The idea has been around for nearly half of a century, kicked off with Parnas’ ideas about information hiding[22][23], and popularized by the likes of Christopher Alexander[24]. So, it was in the soup - and then formalized into handfuls of programming languages. Without this idea and the associated ethos (encapsulation, modularization, organization of function), we wouldn’t have anything like PIP or NPM, whose users deploy this design pattern without thinking twice about it.

Design Patterns emerge first, and last longer than literal Designs themselves. Common understanding of a set of Design Patterns is normally a pre-requisite for the emergence of a Direct Commons.

This is all to say that we need a collective understanding of how our shared systems work before we can successfully use them; NPM requires OOP and APIs which require a broader idea of information hiding. Open Hardware might need some new (or more practice with) design patterns as well: networking, concurrency, asynchrony, reflective programming, feedback systems, interface design, etc.

For more thinking on shared design patterns, consult Nuvolari[2] and Allen[20] who showed that openly disseminated plans for steam engines (at left in the figure above) formed a backbone of the English industrial revolution. Eric von Hippel[21] explores how design patterns bounce around in communities of users, and looks in particular at how innovation happens ‘on the ground’ in niche extreme sports (at right).

2.2 Commons Emerge Around Systems Integration Methods

Once a common practice is established, there are viable grounds on which to build a direct commons: a place where literal, immediately-useful designs can be shared. But there is one more component needed, which is some organizing integration method or deployment tool.

This notion really struck me when Prusa renamed their design-sharing site to just “Printables” - it’s a commons made up of designs that can be deployed immediately by any member of the community who knows how to use a 3D Printer.

Things you can use in your JavaScript program.

Things you can use in your Python program.

Things you might be able to use in your Arduino project.

Things you can Print!

These all have some caveats, mostly because users’ actual systems are all a little different: e.g. some Printables prints will only work on multi-filament machines, many Arduino libraries are not compatible with all Arduinos25, and NPM and PIP packages will grant variable mileage depending on their users’ setups: typescript-or-not, esm-version, dependencies… The world is heterogeneous, and commons-es require some homogeneity, but luckily not absolute homogeneity.

And that’s the point here: a stable method of integration provides the homogeneity required for a technical commons to emerge. NPM and PIP were built on the back of JS and Python’s ubiquity, Printables on the ubiquity of the FDM printer. Those are simple examples, but Open Hardware in general is an umbrella of many other disciplines, each of which has variable integration systems (most of which are either ad-hoc or proprietary). But, it also points the way: if we can make good systems integration tools for various sub-disciplines in hardware, we stand a better chance at building in the commons.
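As a rough sketch of what "some homogeneity, but not absolute homogeneity" could look like inside an integration tool: each shared design declares the capabilities it needs, and the tool checks those against what a given user's system provides. The `Design` and `Machine` types and the capability names below are hypothetical, made up for illustration; none of the commons mentioned above is claimed to work this way.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Design:
    """A shared design plus the capabilities it assumes (hypothetical schema)."""
    name: str
    requires: frozenset = field(default_factory=frozenset)


@dataclass(frozen=True)
class Machine:
    """A user's system, described by the capabilities it provides."""
    name: str
    provides: frozenset = field(default_factory=frozenset)


def missing_capabilities(design: Design, machine: Machine) -> frozenset:
    """Return the capabilities the design needs that the machine lacks."""
    return design.requires - machine.provides


def usable_designs(designs, machine):
    """Filter a commons down to the designs this machine can deploy as-is."""
    return [d for d in designs if not missing_capabilities(d, machine)]


# Illustrative data only: the capability names are assumptions.
commons = [
    Design("cable clip", frozenset({"fdm"})),
    Design("two-colour badge", frozenset({"fdm", "multi_filament"})),
]
single_extruder = Machine("basic printer", frozenset({"fdm"}))

print([d.name for d in usable_designs(commons, single_extruder)])
```

The machine does not need to match every design in the commons, only to share enough of a baseline (here, "fdm") that a useful subset deploys without modification.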

Thinking about this might also help us identify missing commons. For example, everyone is basically agreed on how to draw a schematic for a circuit, and how to render and share design files (with Gerbers). BOMs, also, are relatively easy to standardize. GitHub is (so far) poorly suited to host these types of content because it doesn’t have built-in tools to render them and because some of the formats are difficult to track using git’s versioning system. However, kitspace has emerged as a commons for circuits and it provides the utility of automatically ordering boards and BOMs of anything contributed there.

3. Technical Properties of the Commons

So, we see that together with a set of ubiquitous design patterns, systems integration tools enable commons of directly shareable designs to emerge. For modularity, we need modular systems architectures. A quote from Benkler is worth our time here:

The remaining obstacle to effective peer production is the problem of integration of the modules into a finished product. […] In order for a project to be susceptible to sustainable peer production, the integration function must be either low-cost or itself sufficiently modular to be peer-produced in an iterative process.

From Coase’s Penguin, or, Linux and ‘The Nature of the Firm.’10

Researchers who study these tools note that many of them exhibit similar properties, each of which gets a sub-section here.

3.1 Modularity

This property is pretty obvious and tautological; commons are made of modular bits. Hidden behind this is the more complex requirement for a module to generalize - even if we make a stepper motor driver, say, if it doesn’t generalize across machine, robotics and home automation applications, we don’t have a very good module. Hardware modules have the added challenge of scaling across applications: the motor we want for an excavator is very different from the one we want for a surgical robot, so even in domains where functional blocks can be abstracted using similar descriptors, their hardware instantiations will still need to be wide-ranging. This is one of the reasons why I’m also trying to build self-tuning motion systems, to automatically align generalizable, abstract models with the real world’s heterogeneity.

Modularity always poses a challenge to interface and API designers: how to expose enough of a component’s functionality so that it can be altered for multiple uses, while not over-burdening users with configurations. It is normally impossible for module designers to foresee all possible uses of their contribution, so API design remains something of an art; it is also a domain that will get many software engineers hot under the collar… strong opinions abound, and there are not many rigorous methods available with which to evaluate them.
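One common way to handle this tension, sketched here in Python, is to keep the required interface tiny and push everything else into optional settings with overridable defaults. The `StepperConfig` and `StepperDriver` names, their fields, and the default values are hypothetical, chosen only to illustrate the idea; they do not describe any real driver.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StepperConfig:
    """Optional settings; every field has a default (values are illustrative)."""
    steps_per_rev: int = 200
    microsteps: int = 16
    current_amps: float = 1.0
    invert_direction: bool = False


class StepperDriver:
    """Narrow interface: most users only ever call move_revs()."""

    def __init__(self, config: StepperConfig = StepperConfig()):
        self.config = config
        self.position_steps = 0

    def move_revs(self, revs: float) -> int:
        """Move by a number of revolutions; returns the steps commanded."""
        c = self.config
        steps = round(revs * c.steps_per_rev * c.microsteps)
        if c.invert_direction:
            steps = -steps
        self.position_steps += steps
        return steps


# The common case needs no configuration at all.
default_motor = StepperDriver()
print(default_motor.move_revs(0.5))  # 0.5 rev * 200 steps * 16 microsteps

# A less common case overrides only the settings that differ.
fine_motor = StepperDriver(
    replace(StepperConfig(), steps_per_rev=400, invert_direction=True)
)
print(fine_motor.move_revs(0.5))
```

Whether four settings is too many or too few is exactly the kind of judgment call the paragraph above describes; the sketch only shows where that decision lives in the code.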

3.2 Granularity

One of the core tensions of modular systems design regards the size of a module. Larger modules can be more performant and faster to write for a specific system, but they tend not to generalize as well into components for other applications (complexity tends to go hand-in-hand with specificity). Larger modules also require more time up-front to understand and edit, and can be harder to modify down the road; technical debt is commonly found in the form of ad-hoc, monolithic chunks of code that are difficult to decipher.

Successful commons tend to be highly granular, meaning they are made up of many small contributions rather than a few big ones. This has the technical advantages of comprehensibility and extensibility but it also has the economic function of breaking complex work into smaller chunks of labour. CBPP theorists point this out as an important feature because most contributors to Open Source do so “on the side” - meaning that they don’t have the weeks and months of time required to author large, complex contributions. Instead, they have nights and weekends and tend to make small additions at a time.

3.3 Minimal Burden of Integration

This is perhaps the most important property of a successful commons: it should be very easy to contribute a new module to the commons, and it should be very easy to deploy a module into an application. This extends to the perhaps unintuitive notion that, in order to use someone’s module, we should not have to understand it, which is where the notion of collective intelligence16 emerges. If we can use the outputs of one another’s intellect without comprehending them, the systems we build from those components can embody an intelligence greater than our own and we get to keep some left-over brainpower with which to make our own new and specialized contribution.

We’ve talked about how easy module integration is with software, where programming languages are de-facto systems integration tools and modular design patterns are already diffused in the discipline of computer science. That baseline has improved as languages have added first-class support for module importing to further reduce the burden of integration, like ES6’s import statement (which formalized what began as a node.js kludge). In languages like C++, where no language-level import statement existed until recently, no ubiquitous package manager (or commons of libraries) exists.
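As a small illustration of what a low burden of integration buys, the sketch below adds a checksum to a packet using Python's standard library. The `frame` and `check` helpers and the packet layout are made up for this example; only `zlib.crc32` and the `struct` module come from the standard library. One import line is enough to use the CRC without knowing how it is computed.

```python
import struct
import zlib


def frame(payload: bytes) -> bytes:
    """Append a CRC-32 of the payload as 4 little-endian bytes."""
    return payload + struct.pack("<I", zlib.crc32(payload))


def check(packet: bytes) -> bool:
    """Return True if the trailing 4 bytes match the payload's CRC-32."""
    if len(packet) < 4:
        return False
    payload = packet[:-4]
    (crc,) = struct.unpack("<I", packet[-4:])
    return zlib.crc32(payload) == crc


packet = frame(b"move x 10")
# Same checksum bytes, but one character of the payload has changed.
corrupted = b"move x 11" + packet[-4:]

print(check(packet))
print(check(corrupted))
```

The author of `frame` never has to read a line of the CRC implementation, which is the "use without understanding" property in miniature.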

The arithmetic on why this metric is important is pretty simple; developers weigh the time it takes to contribute their module against the value they get from contributing it. Where incentives are small, the time required needs to be small. If developers don’t contribute, the commons doesn’t grow. Similarly, if it’s difficult to include someone else’s work, users are more likely to write things from scratch - meaning they will build less of the new stuff, and our collective ability dwindles.

Along these lines, I recently pushed an update to Modular Things that makes it easier to build high-level computing interfaces for hardware modules, in an effort to lower the barrier for device-to-device integration (by allowing folks to “do it in software”).

People are also not likely to use an ecosystem of components when plugging into that ecosystem is difficult, or when large changes to their existing project are required in order for them to interface with it. This is why the start-up cost of these commons is often quite high. We need to build out enough of the systems that developers will be incentivized to use them as first-class citizens in their projects. Or, systems need to be simple and flexible enough that they are easily added to existing projects.

4. To the Commons!

So, this ends Part One. I hope you’ve seen how the commons can help us build complex and meaningful technologies using open contributions, and how they emerge and grow - and what we need to build to enable them.

Perhaps you are thinking “gee, much of OSH is already looking like this” - and you would be correct; I plan to explore that topic in more detail next. I think our current state is modular-ish, but that we haven’t accomplished true ‘plug-and-play’ interoperability that lets a commons flourish.

I want to return to this central idea: OSH is trying to build end-user facing products with a lot of re-engineering. Instead, we should look towards modular systems, and build a commons of interoperable parts. This would let us build a more diverse set of more complex artefacts that are easier to use and understand.

But I started by considering the plight of Open Hardware firms, who are still relatively plucky (vs. their Open Software counterparts, and vs. the industrial incumbents who dominate our economy). A lot of the people I talk to want to launch these kinds of firms. They want to build stuff, to do R&D, maintain a shop, make meaningful contributions and share them in the open. This means they effectively want to donate their expertise as a public good: free and useful information. They just want to do it sustainably, and they probably want a little bit of clout, as a treat - and as they are warranted.

I think that governments, businesses and academic institutions ought to be listening to them, and that we should all be trying to figure out how to enable their dream; these are talented individuals whose other door opens into giant tech salaries and NDAs with businesses that don’t pay their taxes. It would be a shame if we let this generation’s engineering talents continue to build up those walled gardens when we have such a promising opportunity to divert them into a shared pool of collective intelligence and wealth, and so many meaningful problems to solve.

I think that building the commons is where it begins, but it is for sure not the whole picture… next up is a discussion on the current state of modularity, then a look at device-to-device integration systems, and finally I’ll explore some forwards-looking economics for the commons.

Notes / References

1. Consumers are also incentivized to purchase from original developers because they know they are getting a product that has been manufactured to the original intent, whereas third-party vendors occasionally use e.g. off-brand chips that cause compatibility issues, or otherwise cut corners.

2. Nuvolari, A. “Collective Invention during the British Industrial Revolution: The Case of the Cornish Pumping Engine.” Cambridge Journal of Economics, vol. 28, no. 3, May 2004, pp. 347–63. DOI.org (Crossref), https://doi.org/10.1093/cje/28.3.347.

3. Lampson, Butler W. “Software components: Only the giants survive.” Computer Systems: Theory, Technology, and Applications. New York, NY: Springer New York, 2004. 137-145.

4. Today, researchers use Design Structure Matrices to study these kinds of couplings. DSMs are also one of the only quantitative ways I have found to measure modularity, and they’re a useful thinking tool. For more, there’s Alan MacCormack’s Exploring the Structure of Complex Software Designs: An Empirical Study of Open Source and Proprietary Code, wherein the structure of Mozilla Firefox is analyzed in the context of Open Source (Firefox’s authors were preparing to OS the code, and wanted to do a modular refactor beforehand; they understood that the code would only be successful as an open project were it made modular) and similar work: The Impact of Component Modularity on Design Evolution.

5. The first notion of information hiding that I’ve found is Parnas’ (1972) On the criteria to be used in decomposing systems into modules; also interesting is his (1985) The Modular Structure of Complex Systems. Since we’re on the topic, I think the first paper is a fascinating read in the context of hardware vs. software modularity because it reminds us that compilers remove modularity when they optimize a program… this seems to be a good reminder that modularity, which seems so easy in computing systems, actually tends to come with a huge performance cost. We get away with building modular systems in software because we have automatic translators (compilers) between bespoke systems (code as compiled) and the systems representations we use to design them (modular codebases). Bespoke things are faster. Everyone knows this, it’s why bespoke or proprietary is synonymous with hot, fast and sexy. In hardware, we have to reckon directly with the thing itself, and live with the real-world costs of our modular systems descriptions. Maybe.

6. This is hot in the business science world, or it was a decade ago. See e.g. Martin Kenney’s (2016) The Rise of the Platform Economy.

7. Baldwin, Carliss Young, and Kim B. Clark. Design rules: The power of modularity. Vol. 1. MIT Press, 2000.

8. For example Intel, who championed the currently dominant instruction set (x86), was founded in 1968, four years after the System/360 was announced in 1964. We are already seeing a shift to ARM-based instruction sets, which should in turn give way to RISC-V, an open-source ISA.

9. Yates, JoAnne, and Craig N. Murphy. Engineering rules: Global standard setting since 1880. JHU Press, 2019.

10. Benkler, Yochai. “Coase’s Penguin, or, Linux and ‘The Nature of the Firm.’” The Yale Law Journal, vol. 112, no. 3, Dec. 2002, p. 369. DOI.org (Crossref), https://doi.org/10.2307/1562247.

11. Lerner, Josh, and Jean Tirole. “Some simple economics of Open Source.” The Journal of Industrial Economics 50.2 (2002): 197-234.

12. Eghbal, Nadia. Working in public: the making and maintenance of Open Source software. San Francisco: Stripe Press, 2020.

13. Raymond, Eric. “The Cathedral and the Bazaar.” Knowledge, Technology and Policy, vol. 12, no. 3, 1999, pp. 23–49.

14. Stallman, Richard. “Why ‘Open Source’ Misses the Point of Free Software.” Communications of the ACM, vol. 52, no. 6, June 2009, pp. 31–33. DOI.org

15. Stallman, Richard. The GNU Manifesto. 1985.

16. Benkler, Yochai, Aaron Shaw, and Benjamin Mako Hill. “Peer production: A form of collective intelligence.” Handbook of collective intelligence 175 (2015).

17. Ostrom, Elinor. Beyond Markets and States: Polycentric Governance of Complex Economic Systems. Nobel Prize Lectures, 8 Dec. 2009.

18. Ostrom, Elinor, and Charlotte Hess. Understanding Knowledge as a Commons: From Theory to Practice. MIT Press, 2007.

19. Lessig, Lawrence. The Future of Ideas: The Fate of the Commons in a Connected World. “Chapter 2: Building Blocks” and “Chapter 14: Alt.Commons” 1st ed., Random House, 2001.

20. Allen, Robert C. “Collective Invention.” Journal of Economic Behavior & Organization, vol. 4, no. 1, 1983, pp. 1–24.

21. Hippel, Eric von. Democratizing Innovation. MIT Press, 2005.

22. Parnas, David Lorge, Paul C. Clements, and David M. Weiss. “Enhancing reusability with information hiding.” Proceedings of the ITT Workshop on Reusability in Programming. 1983.

23. Parnas, David Lorge. “On the criteria to be used in decomposing systems into modules.” Communications of the ACM 15.12 (1972): 1053-1058.

24. Alexander, Christopher. A pattern language: towns, buildings, construction. Oxford University Press, 1977.

25. On the topic of messy-arduino-gotchas, I could go on for a while. While Arduino attempts to enable code portability across microcontrollers, it is a largely leaky abstraction: libraries compile on some devices and not others, libraries can collide with one another (when they both need to use the same UART or SPI peripheral, for example), and can also easily overflow any given microcontroller’s compute or memory resources. I think it boils down to the fundamental non-portability of embedded code. With microcontrollers, more than any other computing system, we need to align our programs with their physical situation: not just the particular MCU running underneath the Arduino layer, but also the circuit that the MCU is attached to. Arduino basically relegates this alignment-hunting to the CPP compiler, which is not made for such a task (compilers being purely feed-forward tools). Template programming might be able to do it, but that sounds like hell also. I remain convinced that doing Arduino’s job well requires a ground-up new-arduino, and probably something akin to a DSL, and probably something based on a more universal hardware description language for circuits. My buddy Erik is cooking something up along these lines, bless his soul.
